The World's Fastest Docker Builders

WarpBuild's new Docker container builders combine high-performance processors with directly attached SSDs to deliver the fastest Docker builds in the world.

First principles

The hardware

Cloud instances have very fast networks, but disk speed is often the limiting factor. Cloud providers offer two types of instances:

- Ones with local SSDs - The local SSDs are fast, but they come with a catch: they are ephemeral. The disk contents are lost when the instance is terminated, so the build context has to be persisted elsewhere, which becomes a bottleneck and a maintenance nightmare.

- Ones with network-attached SSDs - The network-attached SSDs have high latency, but their size is not limited. IOPS and throughput are low by default; they can be improved, though that becomes extremely expensive.

Processors are fast, specifically the Genoa-X EPYC processors (m7a on AWS, c4d on GCP, and dasv6 on Azure) compared to the Intel processors. However, for quick builds, IO is the limiting factor. Faster processors significantly improve overall build times only when the build step itself is time-consuming (as opposed to the IO operations).

A quick aside: The m7a and dasv6 instances have roughly the same single-core performance when tested on PassMark (~2900), while the c4d instances score ~3400. Benchmarks are not everything, but this is a good indicator of the performance difference.

Logical optimizations aka caching

The fastest network or disk transfer is one that is not needed. Caching can eliminate the need for some of the transfers. The more we can cache, the faster the build.
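For context, here is a minimal sketch of how layer caching is commonly wired up on a runner that has no persistent disk, using the cache inputs of `docker/build-push-action`. This is the general-purpose mechanism, not WarpBuild's implementation, and the image name is a hypothetical placeholder. Note that the cache itself still has to travel over the network on every run.

```yaml
# Sketch: layer caching on a runner without a persistent disk.
steps:
  - uses: actions/checkout@v4
  - uses: docker/setup-buildx-action@v3
  - name: Build with a remote layer cache
    uses: docker/build-push-action@v6
    with:
      context: .
      push: false
      tags: example/app:dev          # hypothetical image name
      cache-from: type=gha           # import previously cached layers over the network
      cache-to: type=gha,mode=max    # export all layers, including intermediate ones
```

A builder that keeps its cache on a locally attached disk can skip both the import and the export.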

What makes a fast docker builder?

The (interesting part of the) lifecycle of a docker build is as follows:

- Transfer the context to the builder instance

- Download the base image

- Download the dependencies

- Build the application

- Push the image to a container registry

All the steps except the actual build step are network or disk IO bound. The actual build step is processor and memory bound, though it can also depend on the disk IOPS in certain cases.

But it builds fast on my laptop

That is true, because a typical MacBook has a processor with extremely high single-core performance and an SSD with extremely high IOPS. The network speed may be low, but the entire context, the base image, the dependencies, and some parts of the build step are cached and readily available.

How do we replicate this, but on a cloud instance?

The answer is simple - attach a high performance disk to a high performance CPU instance and pair it with a fast network.

Doing this in a public cloud environment is inefficient, because the fast local disk is ephemeral and the cost of the instance is high.

The solution

We went bare metal. We bought high-performance CPUs and attached large volumes of extremely fast SSDs to them. We then paired them with a blazing fast network.

We then created a custom orchestration layer on top of that to manage the lifecycle of the docker builders.

This orchestration layer is built from the ground up with the following capabilities:

- Complete caching for maximum build speed.

- Dedicated cores of fast, high single core performance processors.

- Storage that is directly attached to the builder instance to ensure that the disk IO is not a bottleneck.

- Nested virtualization to ensure that the builder instance is completely isolated from the host.

- Managing the storage lifecycle even with parallel builds.

Managing the storage lifecycle is a non-trivial problem. We had to ensure that we can handle parallel builds (currently limited but will soon be unlimited) and that the build context is available to the builder instance. Copy-on-write volumes are used to ensure that the build context is available to the builder instance with minimal storage overhead and fast builder startup times.

Performance

This was a lot of effort to get right, but the results speak for themselves.

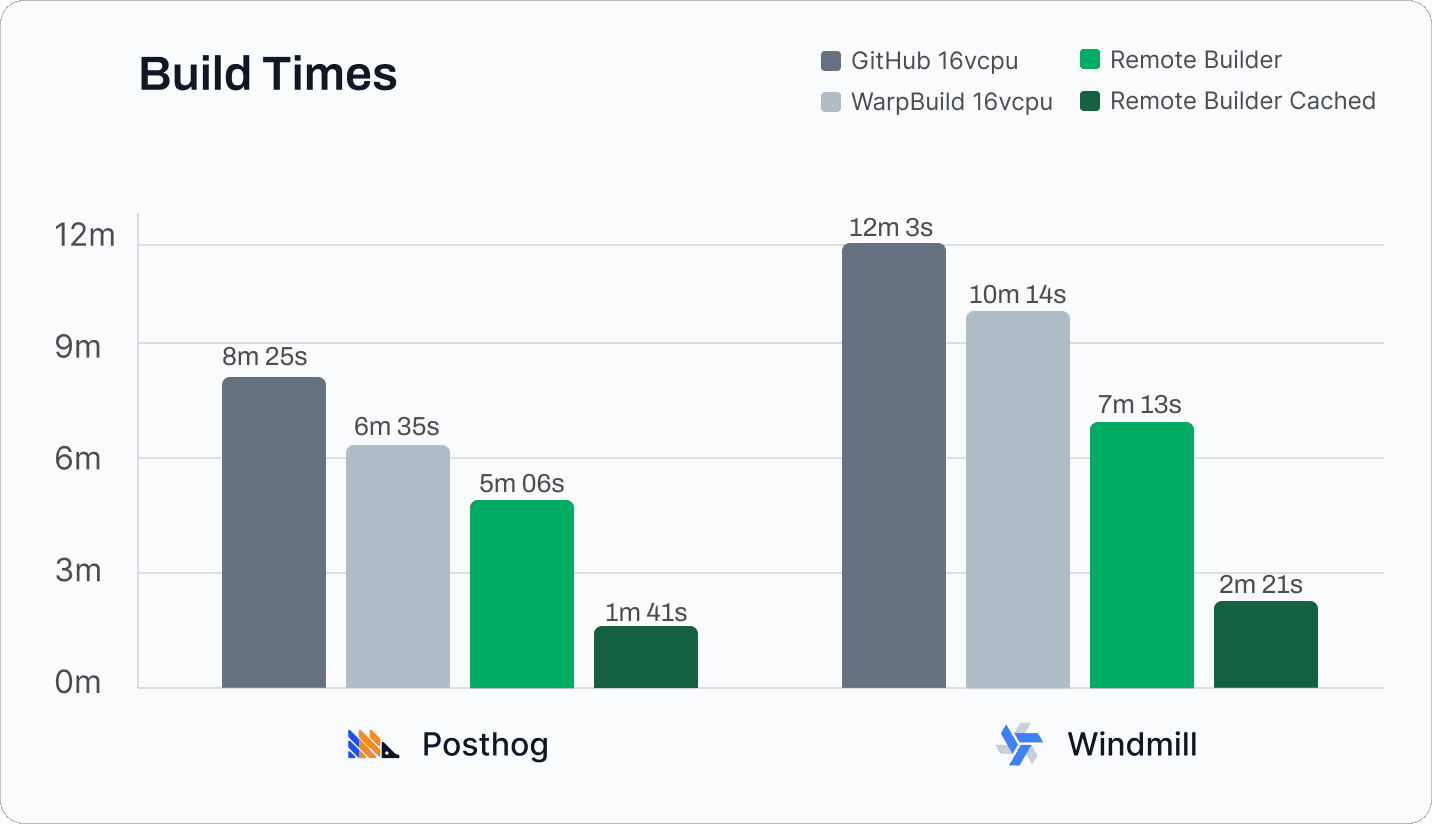

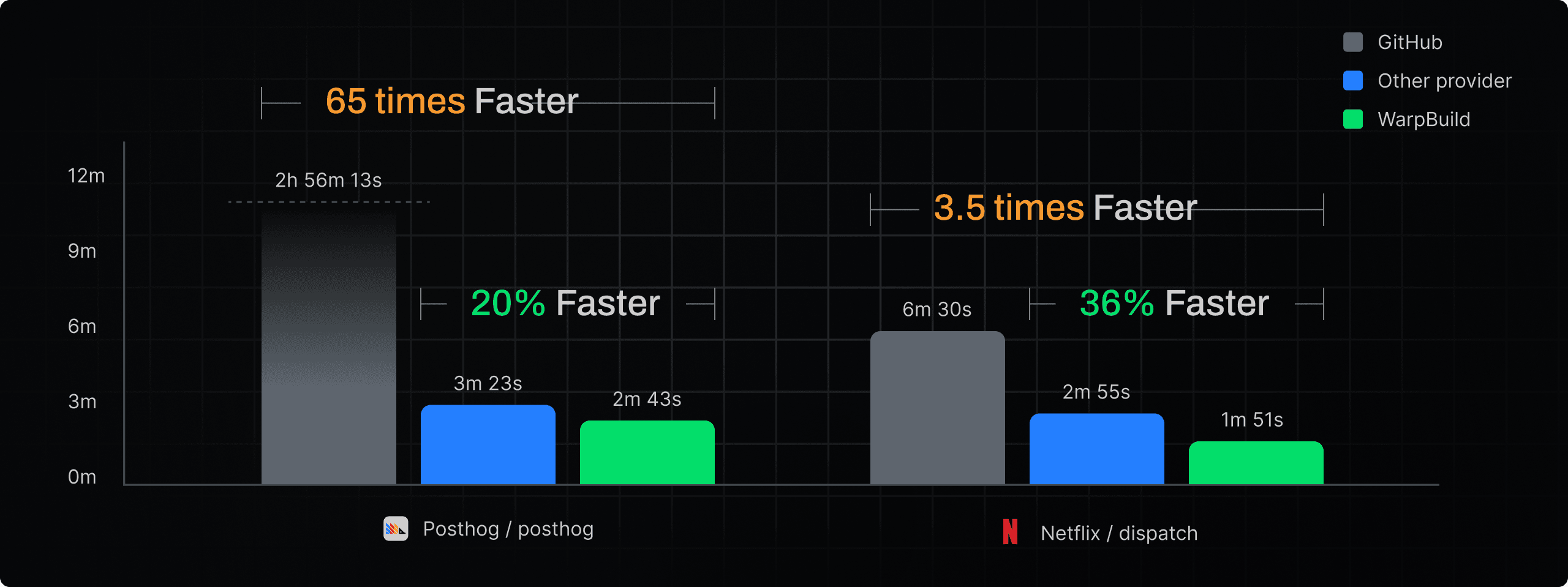

For the same commit on the posthog/posthog and netflix/dispatch repositories, the WarpBuild Docker builders deliver build times that are up to 65x faster than the public cloud builders!

The charts above compare build times between the default GitHub Actions runners, another provider with builders hosted in the public cloud, and the WarpBuild Docker builders.

Since launch, customers have reported real-world build time reductions from 7:00 to 0:45 and from 4:00 to 1:30, and the list goes on.

🚀 Try it out

Create a builder profile in the WarpBuild dashboard and add the following to your GitHub Actions workflow before your build-push-action step:

    - name: Configure WarpBuild Docker Builders
      uses: Warpbuilds/docker-configure@v1
      with:
        profile-name: "super-fast-builder"
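To show where that step sits, here is a minimal sketch of a complete workflow. Only the configure step comes from WarpBuild; the workflow name, trigger, runner label, and image tag are assumptions for illustration, and your setup may need extra steps (for example, a registry login before pushing).

```yaml
name: docker-build                 # hypothetical workflow name
on:
  push:
    branches: [main]               # assumption: build on pushes to main

jobs:
  build:
    runs-on: ubuntu-latest         # assumption: use whichever runner your jobs already use
    steps:
      - uses: actions/checkout@v4

      # Must run before the build step so the build uses the WarpBuild builder profile.
      - name: Configure WarpBuild Docker Builders
        uses: Warpbuilds/docker-configure@v1
        with:
          profile-name: "super-fast-builder"

      - name: Build image
        uses: docker/build-push-action@v6
        with:
          context: .
          push: false              # set to true after adding a registry login step
          tags: example/app:latest # hypothetical image name
```

The `profile-name` value should match the builder profile you created in the WarpBuild dashboard.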
